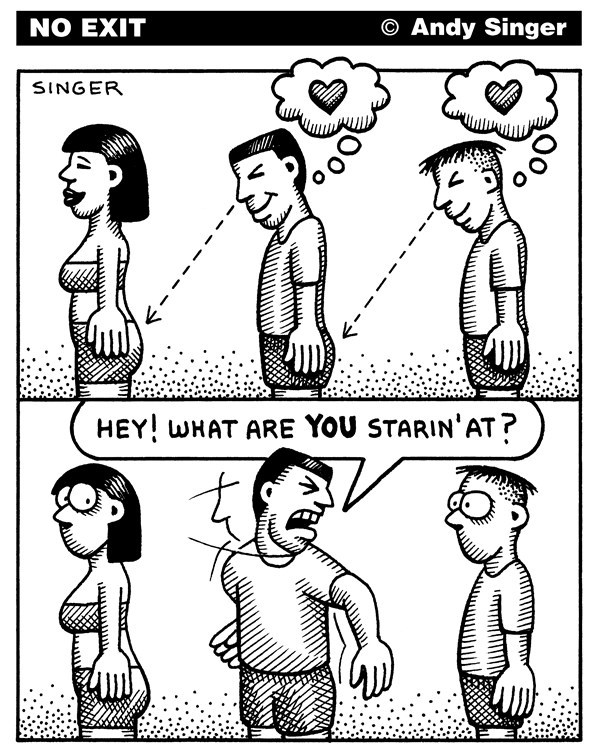

Part of the controversy surrounding affirmative action and other systems that give preferential treatment to minority groups comes from the ideal that people should be judged on their merits, not on their gender, race, etc. [6]. In such an ideal world, for instance, a female scientist would be as likely to be hired, granted tenure, or given accolades as an otherwise identical male scientist.

Science likes to bill itself as a meritocracy, in which scientists are evaluated only on their work. Much of the unease many scientists feel about preferential treatment stems from the sense that it goes against that ideal of meritocratic science [5]. So it’s worth asking: is a female scientist as likely to be hired, tenured, and so on as an otherwise identical male scientist?

Probably the best study design for probing this type of question is the correspondence test. In a correspondence test, you describe either a female or a male individual to a group of participants, keeping everything but gender (or race, ethnicity, etc.) constant, and see whether participants respond differently to the woman than to the man.
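To make the design concrete, here is a minimal Python sketch of the core logic of a correspondence test. It is an illustration under my own assumptions: the function names, the two name labels, and the toy ratings are all hypothetical, not any study’s actual procedure or data. Everyone receives the same materials, a random half see one name and the other half see the other, and the analysis compares average ratings between the two halves.

```python
import random
from statistics import mean

# Hypothetical labels for the two versions of the same CV.
NAMES = ("Karen Miller", "Brian Miller")


def assign_conditions(participant_ids, names=NAMES, seed=None):
    """Randomly split participants into two (near-)equal groups, one per name.

    Everyone receives identical materials; only the name on them differs.
    With an odd number of participants, the second name gets one extra person.
    """
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {pid: (names[0] if i < half else names[1]) for i, pid in enumerate(shuffled)}


def mean_rating_by_condition(assignment, ratings, excluded=()):
    """Average the ratings within each name condition.

    Participants in `excluded` (e.g. those who recognised names on the CV)
    and participants who returned no rating are left out of the analysis.
    """
    groups = {}
    for pid, name in assignment.items():
        if pid in excluded or pid not in ratings:
            continue
        groups.setdefault(name, []).append(ratings[pid])
    return {name: mean(values) for name, values in groups.items()}


# Usage with made-up toy ratings (not data from any study):
assignment = assign_conditions(["p1", "p2", "p3", "p4"], seed=42)
toy_ratings = {"p1": 6, "p2": 8, "p3": 7, "p4": 9}
print(mean_rating_by_condition(assignment, toy_ratings, excluded={"p2"}))
```

Because the name is assigned at random, a systematic gap between the two averages (given enough participants) can be attributed to the name rather than to who happened to land in each group. A real analysis would also test whether that gap is statistically significant.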

Correspondence tests are generally easier to run than audit studies, in which you hire actors who are matched to one another in every respect except gender, race, etc. Both types of study are useful for identifying discrimination against particular groups. Another approach is to pair real male and female scientists with equal on-paper qualifications and see whether they are equally likely to be given tenure. This approach, however, suffers from a pairing problem: are the two scientists really equivalent, or only equivalent on paper?

In this post, I’ll be describing the results of correspondence tests from three papers looking at discrimination against women in science. These three papers are also the only such studies that I know of to have been published since the 1990s. (There’s an older one from the 1970s that is now a bit dated.)

The effect of gender on tenurability

In a paper published in 1999, Steinpreis et al [1] ran a correspondence test looking at the effect of gender on tenure. They sent out hundreds of questionnaires to academic psychologists randomly selected from the Directory of the American Psychological Association. The paper describes two studies, one on tenurability and one on hirability. Over a hundred questionnaires were returned for the tenurability study.

Each questionnaire contained a CV, and participants were asked to rate the CV: would they tenure this individual? Participants were told only that this was part of a study on how CVs are reviewed for tenure decisions.

The CV was that of a real psychologist who had been given early tenure. A random half of the participants received a version of the CV with the name changed to “Karen Miller”; the other half received “Brian Miller”. (The questionnaire also asked whether the participant recognized any of the names on the CV; participants who did were removed from the analysis.)

Each questionnaire had a hidden code on the sheet indicating the gender and institution of the participant it was sent to. This way, the researchers did not have to ask participants for their gender, which could have biased the results by getting them consciously thinking about gender.

The results? “Brian Miller” and “Karen Miller” were equally likely to be offered tenure – but participants were also four times more likely to write cautionary comments about “Karen” than “Brian”, such as “I would need to see evidence that she had gotten these grants and publications on her own” and “We would have to see her job talk”.

One caveat of the study, however, is that this was the CV of a psychologist who had been given early tenure; in short, this was an unambiguously competent applicant. In correspondence studies in non-science contexts, ambiguity has repeatedly been found to play a large role: a minority applicant who is ambiguously qualified for a job (or loan) is less likely to receive it than a similarly qualified majority applicant, but in the face of unambiguous qualifications, biases are muted [2].

The effect of gender on hirability for a tenure-track position

The Steinpreis et al paper contains a second correspondence test, looking at the effect of gender on applications for tenure-track jobs. They used roughly the same approach as in the tenure study, again with over a hundred participants.

Here, the CV was that of the same individual, but at an earlier stage in her career; the dates were shifted to make it seem recent. As in the tenure study, participants saw the CV as that of either “Karen Miller” or “Brian Miller”. Participants were asked whether they would hire the applicant, and what starting salary they would suggest.

Unlike in the tenure study, significant differences emerged between the ratings of “Karen” and “Brian”. “Brian” was significantly more likely to be hired, and significantly more likely to be rated as having adequate research experience, along with adequate teaching and service experience. He was also offered a larger starting salary.

Both female and male participants demonstrated these biases – there was no effect of the participant’s own gender in any part of Steinpreis et al’s two studies.

The effect of gender on hirability for a lab manager position

Thirteen years after the Steinpreis et al study, Moss-Racusin et al ran a correspondence study looking at the effect of gender on the hirability of a candidate for a lab manager position [3]. The candidate here has a bachelor’s degree, so this is a more junior position than the tenure-track job in the Steinpreis et al paper.

Moss-Racusin et al sent job packets to 547 tenure-track and tenured faculty in biology, chemistry and physics departments at American R1 institutions, found through the websites of those departments. In total, 127 respondents fully completed the study.

Each job packet contained the resume and references of the applicant, who was randomly assigned either the name “Jennifer” or “John”. Unlike the Steinpreis et al study, which used a real scientist’s CV, this job packet was created specifically for the study. The applicant was designed to reflect what the authors described as a “slightly ambiguous competence”.

“John”/“Jennifer” was “in the ballpark” for the position but not an obvious star [3]. They had two years of research experience and a journal publication – but a mediocre GPA and mixed references.

Similar to the Steinpreis et al study on hirability, “John” was statistically significantly more likely to be hired than “Jennifer” and was offered a larger starting salary. He was also rated as more competent than “Jennifer”. Participants also indicated a greater inclination to mentor “John” than “Jennifer”. While the differences in the ratings between “John” and “Jennifer” were not huge, the effect sizes were all moderate to large (d = 0.60-0.75).
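The effect sizes above are Cohen’s d values. Roughly, d expresses the gap between two group means in units of their pooled standard deviation, so a modest gap on a rating scale can still be a sizeable effect if ratings within each group don’t vary much. By Cohen’s conventional rules of thumb, d ≈ 0.5 counts as “medium” and d ≈ 0.8 as “large”. Below is a minimal Python sketch of the standard pooled-standard-deviation formula; I’m assuming this common definition (Moss-Racusin et al may have computed theirs slightly differently), and the function name and toy numbers are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Cohen's d: the difference in group means divided by the pooled standard deviation.

    Uses sample variances (n - 1 denominator) pooled across both groups.
    Positive values mean group_a scored higher on average than group_b.
    Each group needs at least two ratings.
    """
    n_a, n_b = len(group_a), len(group_b)
    pooled_variance = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_variance)


# Toy example (made-up ratings): a one-point gap in means, pooled standard deviation of 2 points
print(cohens_d([2, 4, 6], [1, 3, 5]))  # 0.5
```

In the toy call the means differ by one point and the pooled standard deviation is two points, so the gap is half a standard deviation.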

And, as in the Steinpreis et al studies, female and male participants were equally likely to rate “John” above “Jennifer”.

The effect of gender on perceived publication quality and collaboration interest

In the two papers I’ve described above, the correspondence studies all used job application materials for the “correspondence”. Knobloch-Westerwick et al [4] took a look at gender discrimination through a different lens: by having participants rate conference abstracts whose author names were rotated between female and male.

Participants in this study were graduate students (n=243), all of whom were in communications programmes; abstracts were all taken from the 2010 annual conference of the International Communication Association. Participants had not attended this conference.

Unlike in the Steinpreis et al and Moss-Racusin et al papers, participants here evaluated multiple “correspondences”: they each saw 15 abstracts. The participants rated each abstract on a scale of 0-10 for how interesting, relevant, rigorous, and publishable it was. Participants also rated how much they would like to chat to the author, and potentially collaborate with them.

Overall, the same abstracts were rated as having statistically significantly higher scientific quality when presented with a male author than with a female author. Participants were also more interested in talking to, and collaborating with, the author when the author was male. The gender of the participant did not have an effect on the ratings they gave.

Given the other studies I’ve described here, this probably isn’t surprising. What I found quite neat about the paper is that they then broke the results down by subfield. There are two steps to this analysis. Before they ran the main study, they ran a preliminary study with assistant professors, who rated whether a given abstract fell into a female-typed, male-typed, or gender-neutral subfield. Knobloch-Westerwick et al then deliberately selected the abstracts for the main study so that equal numbers came from each of these three categories. Female-typed subfields turned out to be communication relating to children, parenting and body image; male-typed subfields were political communication, computer-mediated communication, news, and journalism. Health communication and intercultural communication were rated as gender-neutral.

In female-typed subfields, female authors were rated higher than male authors. In male-typed subfields, the male authors were rated higher than female authors. And in the gender-neutral areas, female and male authors were rated equally. (It should also be noted that the female-typed abstracts were rated less favourably than gender-neutral and male-typed abstracts.)

This brings us to role congruity theory, which looks at gender through the social construct of gender roles: gender roles represent not only beliefs about the attributes of women and men, but also normative expectations about their behaviour [4]. What the subfield results show is that people who are role-incongruous are discriminated against.

Per the article, “Role congruity theory postulates that bias against female scientists originates in differences between a female gender role and the common expectations towards individuals in a scientist role.” [4] In short, where women go against societal gender norms, they’re viewed less favourably.

Discussion

From the articles here, two factors emerge that seem to determine whether we’ll see discrimination against women in a correspondence study: whether the women are role-congruent or role-incongruent, and how ambiguous the applicant’s qualifications are.

When faced with an extraordinary tenure candidate, it doesn’t seem to matter whether they’re role-congruent or role-incongruent. Bias is more likely when the applicant is ambiguously qualified (which is really most of the time). Often, this will mean that a female scientist needs to be more qualified to get the same job: a male scientist can get it by being “good enough”, but a woman needs to be amazing.

Together, the three papers paint a fairly clear picture: subtle bias against women occurs in science, and female academics are just as prone to this bias as their male colleagues.

There are a number of genuine issues with affirmative action and other preferential treatment systems for minorities (see [2]), but the notion that scientists are already rated independently of gender isn’t one of them. These biases exist, and they add up quickly across an entire discipline and over the course of an individual’s life.

References:

[3] Moss-Racusin, C. A., Dovidio, J. F., Brescoll, V. L., Graham, M. J., & Handelsman, J. (2012). Science faculty’s subtle gender biases favor male students. Proceedings of the National Academy of Sciences, 109(41), 16474-16479.