Highlights from ICER 2013

A few weeks back, I attended ICER 2013 at UC San Diego. Afterwards, I went up to San Francisco and had some adventures there (and at Yosemite), and then spent time in Vancouver seeing friends before coming home.

ICER was a solid conference this year, as always. I liked that it went back to having two-minute roundtable discussions after every talk, before the Q&A. It makes for a much more engaging conference.

All hail the UCSD Sun God, who benevolently oversaw the conference.

It turns out that many of the stumbling blocks people hit when learning programming languages also show up when they learn HTML: syntax errors, figuring out inconsistent syntax, learning that things need to be consistent, and even just learning that what you see is not what you get.

In compsci we tend to overlook teaching HTML since it’s a markup language, not a programming language. But compsci deals with formal languages, and markup languages are the simplest ones. Playing with a markup language is actually a much simpler place to start than giving novices a fully fledged, Turing-complete language.

Other research talks of note:

- Peter Hubwieser et al. presented a paper on categorizing pedagogical content knowledge (PCK) in computer science that I liked. I’d love to see more work on PCK in CS, and I look forward to seeing subsequent work that uses their framework.

- Colleen Lewis et al. performed a replication study of AP CS exams. I love replication studies, so I may be a bit biased towards it :) The original paper found that scores on the first AP CS exam were strongly predicted by only a handful of questions, and those questions were ones like:

```java
int p = 3;
int q = 8;
p = q;
q = p;
```

What are the values of p and q?

In Colleen’s paper, they found that the newer AP CS exams are much more balanced: scores are not predicted by a small number of questions. Good to see!

- Robert McCartney et al. revisited the famous McCracken study, which found that students can’t program after CS1. They found that students can, but that educators have unrealistic expectations of what students would know by the end of CS1.
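The p and q question tests whether students understand that assignment copies a value at that moment rather than linking the two variables. Here is a minimal Java sketch that traces it, next to the temporary-variable version that actually exchanges the values. The class and method names (`SwapQuestion`, `run`, `swap`) are hypothetical and not from either paper:

```java
public class SwapQuestion {
    // Runs the exam question's four assignments and returns the final {p, q}.
    static int[] run() {
        int p = 3;
        int q = 8;
        p = q; // p becomes 8; the original 3 is overwritten and lost
        q = p; // q gets p's *current* value, which is also 8
        return new int[] {p, q};
    }

    // A real swap: save one value in a temporary before overwriting it.
    static int[] swap(int p, int q) {
        int temp = p;
        p = q;
        q = temp;
        return new int[] {p, q};
    }

    public static void main(String[] args) {
        int[] result = run();
        System.out.println("p = " + result[0] + ", q = " + result[1]);
    }
}
```

Running `main` prints `p = 8, q = 8`: once `p = q` executes, nothing holds the 3 anymore, which is exactly the misconception the question probes.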

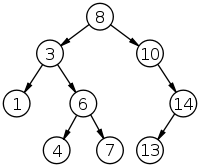

- Michelle Friend and Robert Cutler found that grade 8 students, when asked to write and evaluate search algorithms, favour a sentinel search (jump ahead 100 spots at a time, then 10, then 1) over binary search.

- Mike Hewner found that CS students pick their CS courses with essentially no knowledge of what they’ll be learning in the class. It’s one of those findings that’s kind of obvious in retrospect, but we educators tend to assume our students know what they’re in for. Really, a student about to take a CS2 class doesn’t know what “hash tables” or “graphs” are going in. Students pick classes based more on who the prof is, the class’s reputation, and the time of day.
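To make the contrast in the grade 8 study concrete, here is a rough Java sketch of the two strategies on a sorted array. The "100, then 10, then 1" strategy is essentially a multi-level jump (step) search. The step sizes come from the description above; the class name, the `probes` counter, and the test data are illustrative assumptions, not anything from the paper:

```java
public class SearchComparison {
    static int probes; // array elements examined by the most recent search

    // Step search on a sorted array: advance in steps of 100, then 10, then 1,
    // as long as the element one step ahead is still <= target.
    static int stepSearch(int[] a, int target) {
        probes = 0;
        if (a.length == 0) return -1;
        int start = 0;
        for (int step : new int[] {100, 10, 1}) {
            while (start + step < a.length) {
                probes++;
                if (a[start + step] > target) break; // overshot: try a smaller step
                start += step;
            }
        }
        probes++; // final check of the landing spot
        return a[start] == target ? start : -1;
    }

    // Standard binary search: halve the remaining range on every probe.
    static int binarySearch(int[] a, int target) {
        probes = 0;
        int lo = 0, hi = a.length - 1;
        while (lo <= hi) {
            int mid = lo + (hi - lo) / 2; // avoids int overflow of (lo + hi)
            probes++;
            if (a[mid] == target) return mid;
            if (a[mid] < target) lo = mid + 1;
            else hi = mid - 1;
        }
        return -1;
    }

    public static void main(String[] args) {
        int[] a = new int[1000];
        for (int i = 0; i < a.length; i++) {
            a[i] = 2 * i; // sorted even numbers 0, 2, ..., 1998
        }
        int target = 1234; // stored at index 617

        int stepIndex = stepSearch(a, target);
        int stepProbes = probes;
        int binaryIndex = binarySearch(a, target);
        int binaryProbes = probes;

        System.out.println("step search:   index " + stepIndex + ", probes " + stepProbes);
        System.out.println("binary search: index " + binaryIndex + ", probes " + binaryProbes);
    }
}
```

Both find the target, but because the step sizes are fixed, the step search's probe count grows linearly with the array length (about n/100 probes at the top level alone), while binary search needs at most about log2(n) + 1 probes.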

Finally, Michael Lee et al. found that providing assessments increases how many levels people play in an educational game that teaches programming. It’s a neat paper, but the finding is fairly predictable given the literature on feedback for students.

I enjoyed Michael’s ICER talk from two years ago much more. Using the same educational game, he found that the more the compiler was personified, the more people played. Having a compiler that shows emoticon-style facial expressions and uses first-person pronouns (“I think you missed a semicolon” vs. “Missing semicolon”) makes a dramatic difference in how much people engage with learning computing. That’s a fairly ground-breaking discovery, and I highly recommend the paper.

The conference was, of course, not limited to research talks:

- The doctoral consortium, as always, was a great experience. I got good feedback, about a dozen things to read, and some awesome conference buddies!

- The lightning talks were neat. My favourite was Ed Knorr’s talk on teaching fourth-year databases, since third-year and fourth-year CS courses are so often overlooked in the CS ed community. I also liked Kathi Fisler’s talk on using outcome-based grading in CS.

- The discussion talks were interesting! Elena Glassman talked about having students compare solution approaches in programming, which nicely complements the work I presented this year.

- The keynote also stressed the importance of comparing and contrasting. My takeaway from the keynote, however, was a teaching tip. Scott, the speaker, found that students learnt more from assignments if, upon hand-in, they were asked to grade themselves on the assignment. (Students would basically get a bonus if their self-assessment agreed with the TAs’ assessment.) It’s such a small thing to add to the hand-in process, and it adds so much to what students get out of it. I’ll definitely try this next time I teach.
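If I try the self-assessment tip, the grading rule might look something like this Java sketch. The keynote only said that students got a bonus when their self-assessment agreed with the TAs’; the 5-point tolerance, the 2-point bonus, and all names here are my own placeholder assumptions, not Scott’s actual scheme:

```java
public class SelfAssessmentBonus {
    // Hypothetical policy parameters (assumptions, not from the keynote).
    static final double TOLERANCE = 5.0; // max gap, in marks, that still counts as "agreeing"
    static final double BONUS = 2.0;     // bonus for an accurate self-assessment

    // The TA's mark always stands; the student's self-assessed mark only
    // decides whether the bonus is added on top.
    static double finalMark(double selfMark, double taMark) {
        boolean agrees = Math.abs(selfMark - taMark) <= TOLERANCE;
        return agrees ? taMark + BONUS : taMark;
    }

    public static void main(String[] args) {
        System.out.println(finalMark(80, 83)); // close to the TA's mark: bonus applies
        System.out.println(finalMark(95, 70)); // far off: TA's mark only
    }
}
```

Keeping the TA’s mark as the base means over-estimating never pays off; the only reward is for judging your own work accurately.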

Overall, a great time, and I’m looking forward to ICER 2014 in Glasgow!