A Seven-Step Primer on Soft Systems Methodology

I’m currently TAing for CSC2720H Systems Thinking for Global Problems, a graduate-level course on systems thinking. In class today we talked about soft systems methodology (SSM), an approach that uses systems thinking to tackle what are called “wicked problems”. I thought I’d outline one approach to SSM, as it’s useful to CS education research.

Step 1: Identify the domain of interest

Before you can research something, you should first decide what your domain is. What topic? What system are you studying? For example, “teaching computer science” could be your starting point, as could “climate change”.

Chances are you’re looking at a wicked problem. Conklin defines wicked problems by these characteristics:

- The problem is not understood until after the formulation of a solution.

- Wicked problems have no stopping rule.

- Solutions to wicked problems are not right or wrong.

- Every wicked problem is essentially novel and unique.

- Every solution to a wicked problem is a ‘one shot operation.’

- Wicked problems have no given alternative solutions.

Because you’re looking at a domain which doesn’t have a clear definition or boundaries, you’ll first want to immerse yourself in the domain. One trick is to draw “rich pictures”, which are essentially visualized streams of consciousness.

You should also think about what perspectives you bring into this domain. What biases and privileges do you have going into this? Why are you interested in this domain? What do you have to gain or lose here?

Step 2: Express the Problem Situation

A good expression of a situation should contain no value judgments. As a heuristic, ask yourself if it’s possible for somebody to not see your statement as a problem.

For example: “Global surface temperatures on Earth have been increasing since the late 19th century” is a statement some people may not even see as a problem, whereas “Climate change will destroy our way of life” presents climate change as a problem, rather than as a situation.

The goal of expressing a situation is so that you can then identify the different ways people would frame this as a problem. If you express the situation as inherently problematic, this will narrow the problem frames that you’ll think of.

It may take you a few drafts to come up with a situation statement you’re happy with. That’s fine; SSM is an iterative process. You may find in later steps you’ll want to come back here (or to earlier steps) and revise your work.

Step 3: Identify Different Problem Frames

How you frame a situation will affect the types of analysis and solutions you’ll come up with. Your next step is to think of the different ways the situation could be framed, before you pick which one to proceed with. I’ll give three examples.

Situation: A large fraction of undergraduate students fail in first-year CS.

Some problem frames:

1. The context is problematic. Students are overburdened in all their classes, and have a difficult time adjusting to university study.

2. The curriculum structure is problematic. CS1 material builds on itself to an extent that other first-year classes don’t. If you fall behind, it can be impossible to catch up.

3. The amount of content is problematic. We pack too much material into CS1 for students to properly absorb and understand.

4. The pedagogy is problematic. We just aren’t teaching CS1 effectively.

5. The affordances are problematic. We teach CS1 using programming languages which don’t reflect how non-programmers reason about computation (e.g. while loops vs. until loops).

6. It isn’t a problem. The failure rates, while high, are consistent with other first-year courses.

7. Computer science is inherently difficult for humans to learn.

Situation: Computer science is not available in every high school in Canada.

Some problem frames:

1. If the general population doesn’t learn about CS, they won’t be able to properly participate in a 21st century democracy. Issues of privacy and security are poorly understood but necessary for democracies to address.

2. Not enough young Canadians are equipped with the necessary computational skills for the workforce.

3. CS is generally only offered at affluent, urban schools. This causes racial and class disparities in access to CS, and in turn, to lucrative jobs.

4. Fewer girls than boys take high school CS; if more girls could take high school CS it could reduce the gender gap.

5. There aren’t enough qualified high school teachers to teach CS in every school.

6. Schools are underfunded and overburdened.

7. This isn’t a problem; schools should be focusing on other topics instead!

Situation: The percentage of women completing undergrad CS programmes hasn’t changed since the wide-scale creation of women in computing initiatives.

Some problem frames:

1. The initiatives are being undermined by the same external forces that created the gender disparity in CS.

2. The initiatives are themselves faulty. They reinforce gender norms and the subtyping of “female computer scientist” != “computer scientist”.

3. The initiatives are having a positive impact, but not in ways this measurement can capture. For example, they improve the personal experiences of the women in the field, but don’t change the numbers.

4. Without the initiatives, the percentage would have decreased. Before the initiatives, the percentage was decreasing; it has since flatlined.

Step 4: Study the problem frames and pick one

At this point you’ll want to start doing a literature review. As you review the literature you’ll find different papers take different problem frames (explicitly or implicitly).

Each problem frame will lead to different approaches to studying and “solving” the problem. Let’s return to the CS1 failure example:

Situation: A large fraction of undergraduate students fail in first-year CS.

1. The context is problematic. Students are overburdened in all their classes, and have a difficult time adjusting to university study.

   Possible solutions: increase financial aid, reduce course load

2. The curriculum structure is problematic. CS1 material builds on itself to an extent that other first-year classes don’t. If you fall behind, it can be impossible to catch up.

   Possible solution: reconfigure the CS1 material to be breadth-first, like they have at Harvey Mudd

3. The amount of content is problematic. We pack too much material into CS1 for students to properly absorb and understand.

   Possible solutions: spread it out amongst more classes, get CS1 in all high schools

4. The pedagogy is problematic. We just aren’t teaching CS1 effectively.

   Possible solutions: use peer instruction and/or pair programming

5. The affordances are problematic. We teach CS1 using programming languages which don’t reflect how non-programmers reason about computation (e.g. while loops vs. until loops).

Ultimately, for your research you’ll need to pick a single problem frame to work within. At this point you should choose one and justify why you’re picking that one rather than the others. Once you’ve picked your problem frame you’ll want to carefully review and analyse the relevant literature.

Step 5: Arena of Action

Think about your problem frame like you’re framing a photograph. Who or what is in the foreground? In the background? Not in the frame at all?

Or, if you see your situation as an arena: who is in the arena? Who is being affected by the situation? Who has the power to change the situation? Who benefits from this framing, and who loses out?

CATWOE Analysis can come in here: who are the customers/clients? The actors? The transformation process? What’s the world view underlying this? Who “owns” or controls this? What environmental constraints are there?
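If you like to keep your notes structured, here is a minimal sketch of recording a CATWOE analysis as a small Python data structure. The class name, field names, and example entries are my own illustrative assumptions (loosely based on the “pedagogy is problematic” frame from the CS1 example), not a standard SSM tool.

```python
from dataclasses import dataclass, fields


@dataclass
class Catwoe:
    """One CATWOE analysis for a single problem frame (hypothetical structure)."""
    customers: str
    actors: str
    transformation: str
    worldview: str
    owners: str
    environment: str

    def unanswered(self) -> list[str]:
        """Return the names of the CATWOE elements that are still blank."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


# Illustrative entries only; a real analysis would come from your own study.
pedagogy_frame = Catwoe(
    customers="first-year CS students",
    actors="CS1 instructors and TAs",
    transformation="students who fail CS1 -> students who pass CS1",
    worldview="failure rates reflect how the course is taught",
    owners="",        # not yet identified
    environment="",   # not yet identified
)

print(pedagogy_frame.unanswered())
```

Leaving a field blank and listing what is unanswered is just one way to remind yourself which of the six questions you haven’t addressed yet for a given frame.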

When I took a class on policy analysis, some questions we asked were: “Why was this policy adopted? On whose terms? Why? On what grounds have these selections been justified? Why? In whose interests? Indeed, how have competing interests been negotiated?” as well as “Why now?” and “What are the consequences?” (from Taylor et al., 1997, “Doing Policy Analysis”).

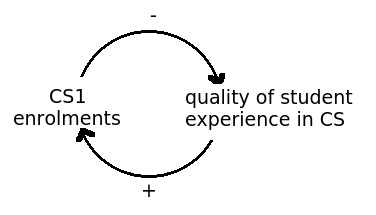

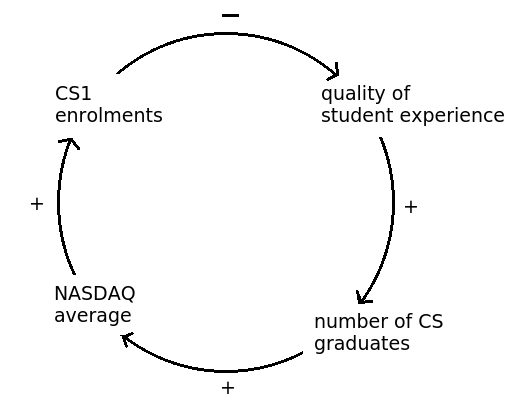

Step 6: Theorize/model the relevant system

Having identified your problem frame, you’ll want to return to the system at hand. Depending on the problem, you may want to model it and/or theorize about it based on the existing literature.

Once you have a model, compare it to real world evidence. You may have to design and perform empirical studies in order to accomplish this.

Step 7: Identify possible/feasible changes to the situation, and take action

Like it says. Soft systems methodology was created for action research, a style of research intended to create social change. In this paradigm, research is bad if it doesn’t help improve the situation.

Your goal in doing this research is to identify changes to the situation which are both possible and feasible. You should identify who has the power to enact these changes. Challenge yourself: contact these people and share your findings. Advocate for your proposed solution.